Arxiv Paper: https://arxiv.org/abs/2301.13430

Source Code: https://github.com/yerfor/GeneFace

OpenReview Discussion: https://openreview.net/forum?id=YfwMIDhPccD

Demo: GeneFace driven by a singing voice generated by DiffSinger

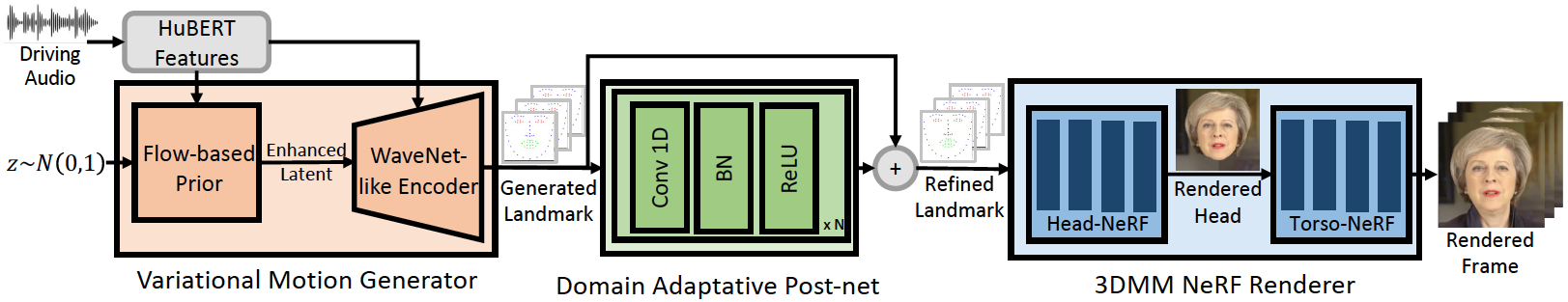

Overall Pipeline

Abstract

Generating photo-realistic video portraits from arbitrary speech audio is a crucial problem in film-making and virtual reality. Recently, several works have explored the use of neural radiance fields (NeRF) in this task to improve 3D realness and image fidelity. However, the generalizability of previous NeRF-based methods is limited by the small scale of their training data. In this work, we propose GeneFace, a generalized and high-fidelity NeRF-based talking face generation method that can generate natural results for a wide range of out-of-domain audio. Specifically, we learn a variational motion generator on a large lip-reading corpus and introduce a domain-adaptive post-net to calibrate the result. Moreover, we learn a NeRF-based renderer conditioned on the predicted motion. A head-aware torso-NeRF is proposed to eliminate the head-torso separation problem. Extensive experiments show that our method achieves more generalized and higher-fidelity talking face generation than previous methods.

Demo Video on Submission

Additional Demos for Rebuttal

1. We address the instability in the original video by post-processing the predicted landmarks.

We can see that the artifacts in the original video (left), such as changing hair and a shaking head, are alleviated in the post-processed video (right).
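The page does not say which post-processing is applied to the predicted landmarks. As one plausible example, and purely as an assumption, a centered moving-average filter over time damps frame-to-frame jitter in a landmark sequence. The function name `smooth_landmarks`, the `(T, N, 3)` array layout, and the default window size are hypothetical and not taken from the GeneFace code.

```python
import numpy as np


def smooth_landmarks(landmarks: np.ndarray, window: int = 5) -> np.ndarray:
    """Hypothetical temporal smoother for a landmark sequence.

    landmarks: array of shape (T, N, 3), i.e. T frames, N landmarks, xyz coordinates.
    window: odd number of consecutive frames averaged around each frame.
    Returns an array with the same shape as `landmarks`.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    num_frames = landmarks.shape[0]
    # Repeat the first/last frame at the edges so the output keeps T frames.
    padded = np.pad(landmarks, ((half, half), (0, 0), (0, 0)), mode="edge")
    # Frame t of the output is the mean of padded frames t .. t + window - 1,
    # i.e. a window centered on original frame t.
    return np.stack([padded[t:t + window].mean(axis=0) for t in range(num_frames)])
```

A wider window removes more jitter but also blurs fast lip movements, so the window size trades visual stability against lip-sync sharpness.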

2. Additional comparison with Wav2Lip and LSP

We can see that the stabilized GeneFace achieves significantly better image quality and video realism than Wav2Lip and LSP.

3. Additional comparison with AD-NeRF

We can see that GeneFace achieves noticeably better lip synchronization than AD-NeRF when driven by out-of-domain (OOD) audio. By contrast, AD-NeRF tends to keep the mouth half-closed, an artifact identified as the “mean face” problem.
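As a rough illustration of why averaging can produce a half-closed mouth (a common intuition for this kind of artifact, not an analysis taken from the paper), consider a deterministic model trained with a mean squared error (MSE) objective. This toy sketch assumes hypothetical "mouth openness" labels where the same ambiguous audio context appears with the mouth either closed (0.0) or open (1.0). The MSE-optimal constant prediction is the mean of the targets, a half-open mouth that matches none of the observed samples.

```python
import numpy as np

# Hypothetical mouth-openness labels (0.0 = closed, 1.0 = open) observed for
# the same ambiguous audio context in a toy training set.
targets = np.array([0.0, 1.0, 0.0, 1.0])


def mse(prediction: float) -> float:
    """Mean squared error of one constant prediction against all targets."""
    return float(np.mean((targets - prediction) ** 2))


# The constant prediction that minimizes MSE is the mean of the targets.
mean_prediction = float(targets.mean())

for label, value in [("closed", 0.0), ("half-open (mean)", mean_prediction), ("open", 1.0)]:
    print(f"{label}: prediction={value:.2f}, MSE={mse(value):.3f}")
```

The half-open prediction scores the lowest MSE even though no training sample looks like it, which is why generative models that can represent several plausible mouth shapes are an appealing alternative to plain regression.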

4. Additional ablation studies on the effectiveness of the Variational Generator

To evaluate the effectiveness of the Variational Generator, we compare VAE+Flow against a vanilla VAE and a regression-based model (trained with an MSE loss). We use the L2 error between the predicted and ground-truth (GT) 3D landmarks as the evaluation metric. We can see that VAE+Flow achieves the best performance.
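The exact form of the L2 landmark error is not spelled out here (for example, squared vs. unsquared distance, or any normalization). A minimal sketch, assuming it is the Euclidean distance per landmark averaged over all frames and landmarks; the function name `landmark_l2_error` and the `(T, N, 3)` array layout are hypothetical.

```python
import numpy as np


def landmark_l2_error(pred: np.ndarray, gt: np.ndarray) -> float:
    """Hypothetical mean per-landmark L2 error between predicted and GT 3D landmarks.

    pred, gt: arrays of shape (T, N, 3), i.e. T frames, N landmarks, xyz coordinates.
    """
    if pred.shape != gt.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {gt.shape}")
    # Euclidean distance for every (frame, landmark) pair: shape (T, N).
    dists = np.linalg.norm(pred - gt, axis=-1)
    # Average over frames and landmarks to get a single scalar.
    return float(dists.mean())


# Usage: every landmark offset by (3, 4, 0) gives an error of exactly 5.
gt = np.zeros((2, 3, 3))
pred = gt.copy()
pred[..., :2] = [3.0, 4.0]
print(landmark_l2_error(pred, gt))  # → 5.0
```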